Image Editing

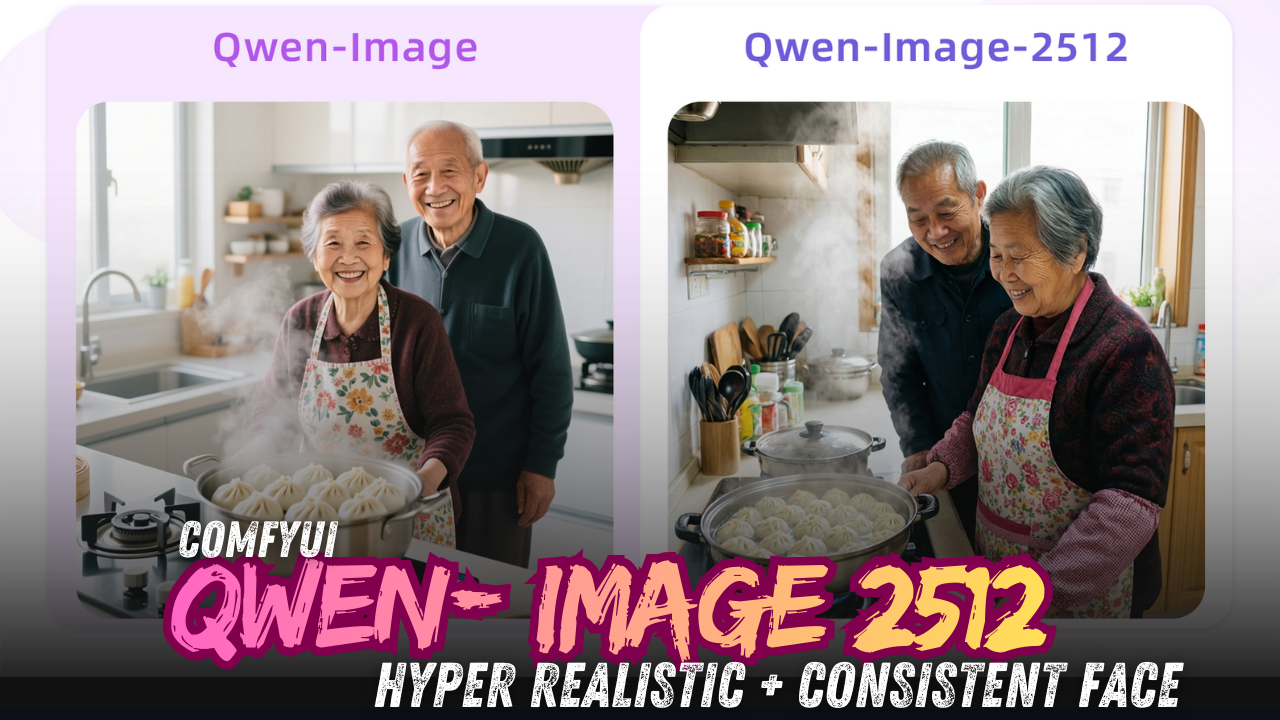

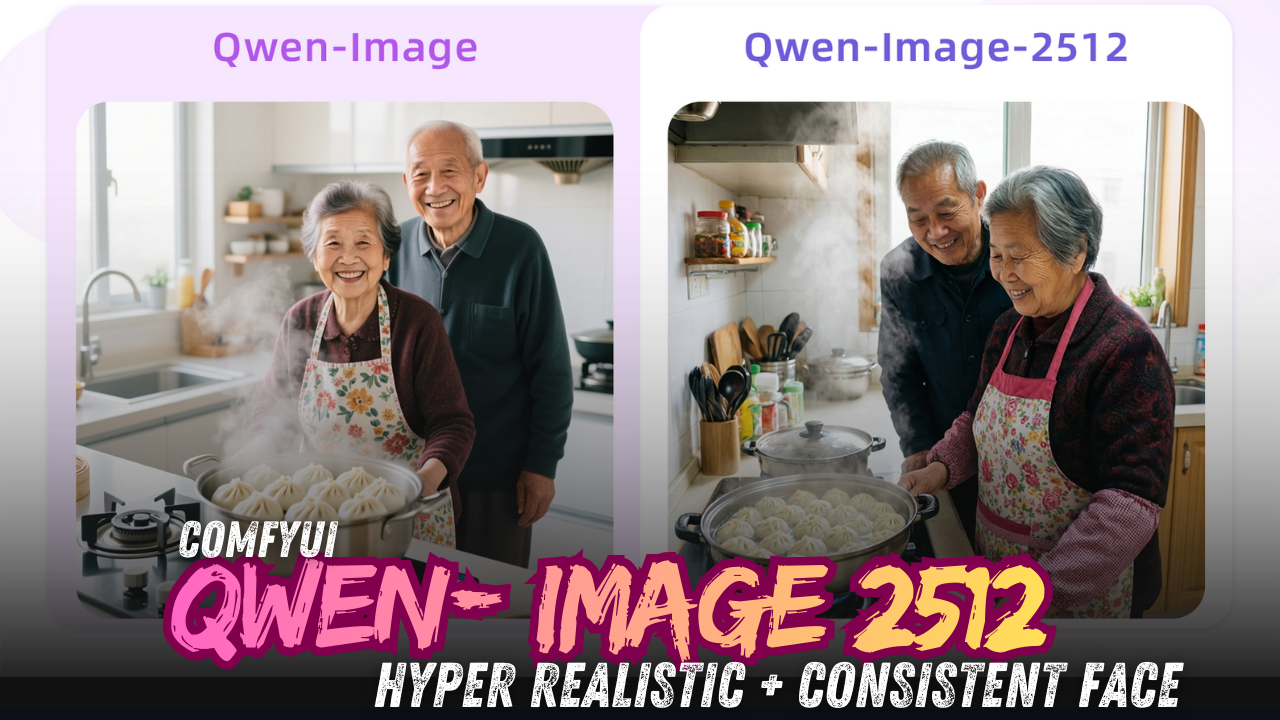

Qwen Image Edit 2511

Instruction-based image editing with Alibaba's Qwen Image Edit model (December 2025 update), which uses Qwen2.5-VL as its text encoder. The update brings enhanced human realism, more natural details, and improved text rendering.

Required Models

Text Encoder

Qwen 2.5 VL 7B Text Encoder (FP8)

Vision-Language model for understanding text prompts and image context. Required for instruction-based editing.

Diffusion Model

Qwen Image Edit 2511 Diffusion Model

The core diffusion model for image editing. Performs the actual edits based on prompts and reference images.

VAE

Qwen Image VAE

Variational Autoencoder. Encodes images to latent space and decodes results back to pixels.

LoRA

Qwen Image Edit Lightning LoRA (4-Step)

Distilled LoRA for faster generation. Reduces required steps from 20-40 down to just 4, with minimal quality loss.

Model Placement

    📂 ComfyUI/
    ├── 📂 models/
    │   ├── 📂 text_encoders/
    │   │   └── qwen_2.5_vl_7b_fp8_scaled.safetensors
    │   ├── 📂 loras/
    │   │   └── Qwen-Image-Edit-2511-Lightning-4steps-V1.0-bf16.safetensors
    │   ├── 📂 diffusion_models/
    │   │   └── qwen_image_edit_2511_bf16.safetensors
    │   └── 📂 vae/
    │       └── qwen_image_vae.safetensors
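A misplaced file is a common reason a loader node shows an empty dropdown, so it can help to check the layout before opening the workflow. The following is a minimal sketch, not part of ComfyUI itself: the function name `find_missing_models` and the default root `ComfyUI` are assumptions for illustration, and the relative paths are copied from the layout above.

```python
from pathlib import Path

# Relative paths copied from the Model Placement layout.
EXPECTED_MODELS = [
    "models/text_encoders/qwen_2.5_vl_7b_fp8_scaled.safetensors",
    "models/loras/Qwen-Image-Edit-2511-Lightning-4steps-V1.0-bf16.safetensors",
    "models/diffusion_models/qwen_image_edit_2511_bf16.safetensors",
    "models/vae/qwen_image_vae.safetensors",
]


def find_missing_models(comfy_root):
    """Return the expected model files that are absent under comfy_root.

    `comfy_root` is the ComfyUI installation directory (hypothetical helper,
    not a ComfyUI API).
    """
    root = Path(comfy_root)
    return [rel for rel in EXPECTED_MODELS if not (root / rel).is_file()]


if __name__ == "__main__":
    import sys

    # Assumed default: a ComfyUI folder in the current directory.
    root = sys.argv[1] if len(sys.argv) > 1 else "ComfyUI"
    missing = find_missing_models(root)
    if missing:
        print("Missing model files:")
        for rel in missing:
            print("  " + rel)
    else:
        print("All four model files are in place.")
```

Run it with the path to your installation as the only argument. If you skip the Lightning LoRA, the entry under `loras/` will be reported as missing and can be ignored.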

KSampler Settings

| Mode | Steps | CFG |
|---|---|---|
| Standard (Best Quality) | 40 | 4.0 |
| Balanced | 20 | 4.0 |
| Lightning LoRA | 4 | 1.0 |
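If you drive ComfyUI from a script, the settings above can be kept as presets and written into a workflow before queuing it. The sketch below assumes the workflow was exported in API format, that is, a dictionary mapping node IDs to entries with `class_type` and `inputs`. The preset keys and the `apply_preset` helper are illustrative names, not part of ComfyUI.

```python
import copy

# Steps and CFG copied from the KSampler Settings table; the keys are illustrative.
KSAMPLER_PRESETS = {
    "standard": {"steps": 40, "cfg": 4.0},
    "balanced": {"steps": 20, "cfg": 4.0},
    "lightning": {"steps": 4, "cfg": 1.0},
}


def apply_preset(workflow, mode):
    """Return a copy of an API-format workflow with each KSampler node
    set to the steps and CFG of the chosen preset.

    Hypothetical helper: it only touches nodes whose class_type is
    "KSampler" and leaves every other input (seed, sampler, etc.) alone.
    """
    preset = KSAMPLER_PRESETS[mode]
    patched = copy.deepcopy(workflow)
    for node in patched.values():
        if isinstance(node, dict) and node.get("class_type") == "KSampler":
            node.setdefault("inputs", {}).update(preset)
    return patched


# Tiny stand-in workflow for demonstration; a real export has many more nodes.
example = {
    "3": {"class_type": "KSampler", "inputs": {"steps": 20, "cfg": 4.0, "seed": 0}},
    "8": {"class_type": "VAEDecode", "inputs": {}},
}
patched = apply_preset(example, "lightning")
print(patched["3"]["inputs"])
```

The helper changes only the sampler settings. The Lightning preset is meant to be used with the Lightning LoRA loaded in the workflow, which this sketch does not add.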

Usage Tips

- Use image1 as your source image to edit.

- Use image2 as an optional reference (e.g., texture, style).

- Describe your edit in natural language: "Change the furniture to the fur material in image 2."

- Lightning LoRA is recommended for faster iteration (4 steps).